1. List & Remove images

docker images -a

docker rmi image-name1 image-name2

2. List & Remove dangling images

docker images -f dangling=true

docker rmi $(docker images -f dangling=true -q)
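When no dangling images exist, the command substitution above expands to nothing and `docker rmi` fails with a usage error because it receives no arguments. A minimal sketch of a guard, assuming a POSIX-style shell such as sh or bash; the function name `remove_dangling_images` is hypothetical and not part of Docker:

```shell
# Hypothetical helper: remove dangling images only when some exist.
remove_dangling_images() {
    # Collect the IDs of dangling images (one per line, possibly empty).
    ids=$(docker images -f dangling=true -q)
    if [ -z "$ids" ]; then
        echo "no dangling images to remove"
        return 0
    fi
    # Intentionally unquoted so each ID becomes a separate argument.
    docker rmi $ids
}
```

Call it as `remove_dangling_images`. The built-in `docker image prune` also removes dangling images and may be simpler where it is available.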

3. Remove all images

docker rmi $(docker images -a -q)

4. List & Remove images by pattern

docker images -a | grep "pattern"

docker images -a | grep "pattern" | awk '{print $3}' | xargs docker rmi
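Running `grep` over the tabular output matches the pattern in any column (tag, ID, size), not only the image name. One possible way to restrict the match to the repository name is to ask Docker for just the needed fields with `--format`. This is a sketch, assuming a POSIX-style shell and `awk`; the function name `image_ids_matching` is hypothetical:

```shell
# Hypothetical helper: print IDs of images whose repository name matches a pattern.
image_ids_matching() {
    pattern=$1
    # --format prints one "repository id" pair per image, without the header row.
    docker images --format '{{.Repository}} {{.ID}}' |
        awk -v pat="$pattern" '$1 ~ pat { print $2 }'
}
```

Review the output of `image_ids_matching "pattern"` first, then pass it on, for example with `docker rmi $(image_ids_matching "pattern")`.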

5. List & Remove containers

docker ps -a

docker rm container1_id container2_id

docker container rm container-name

6. Remove container upon exit

docker run --rm image_name

7. List & Remove all exited containers

docker ps -a -f status=exited

docker rm $(docker ps -a -f status=exited -q)

8. List & Remove using multiple filters

docker ps -a -f status=exited -f status=created

docker rm $(docker ps -a -f status=exited -f status=created -q)

9. List & Remove all containers by pattern

docker ps -a | grep "pattern"

docker ps -a | grep "pattern" | awk '{print $1}' | xargs docker rm
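As an alternative to piping through `grep` and `awk`, the `name` filter of `docker ps` selects containers whose name matches all or part of a string. The sketch below assumes a POSIX-style shell; the function name `remove_containers_matching` is hypothetical:

```shell
# Hypothetical helper: remove all containers whose name matches a pattern.
remove_containers_matching() {
    # -q prints only container IDs; the name filter does the matching.
    ids=$(docker ps -a -q -f "name=$1")
    if [ -z "$ids" ]; then
        echo "no containers match '$1'"
        return 0
    fi
    # Intentionally unquoted so each ID becomes a separate argument.
    docker rm $ids
}
```

For example, `remove_containers_matching web` removes every stopped container with `web` in its name. Running containers still need `docker stop` first.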

10. Stop and remove all containers

docker ps -a

docker stop $(docker ps -a -q)

docker rm $(docker ps -a -q)

docker stop container-name

11.

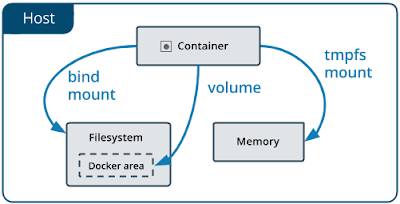

List & Removing Volumes

docker

volume ls

docker

volume rm volume_name volume_name

12. List & Remove all dangling volumes

docker volume ls -f dangling=true

docker volume rm $(docker volume ls -f dangling=true -q)

13. Remove a container and its anonymous volumes

docker rm -v container_name